The Quest to Make AIs Truly Intelligent

Artificial Intelligence, for all of its recent wins against humans, is still somewhat of a misnomer. While AIs are becoming ridiculously good at specific tasks, they're still a far cry from what humans would consider "intelligent".

There are many ways to describe intelligence and what it encompasses, but one school of thought defines it as the ability to flexibly tackle new problems based on past experience and logical reasoning.

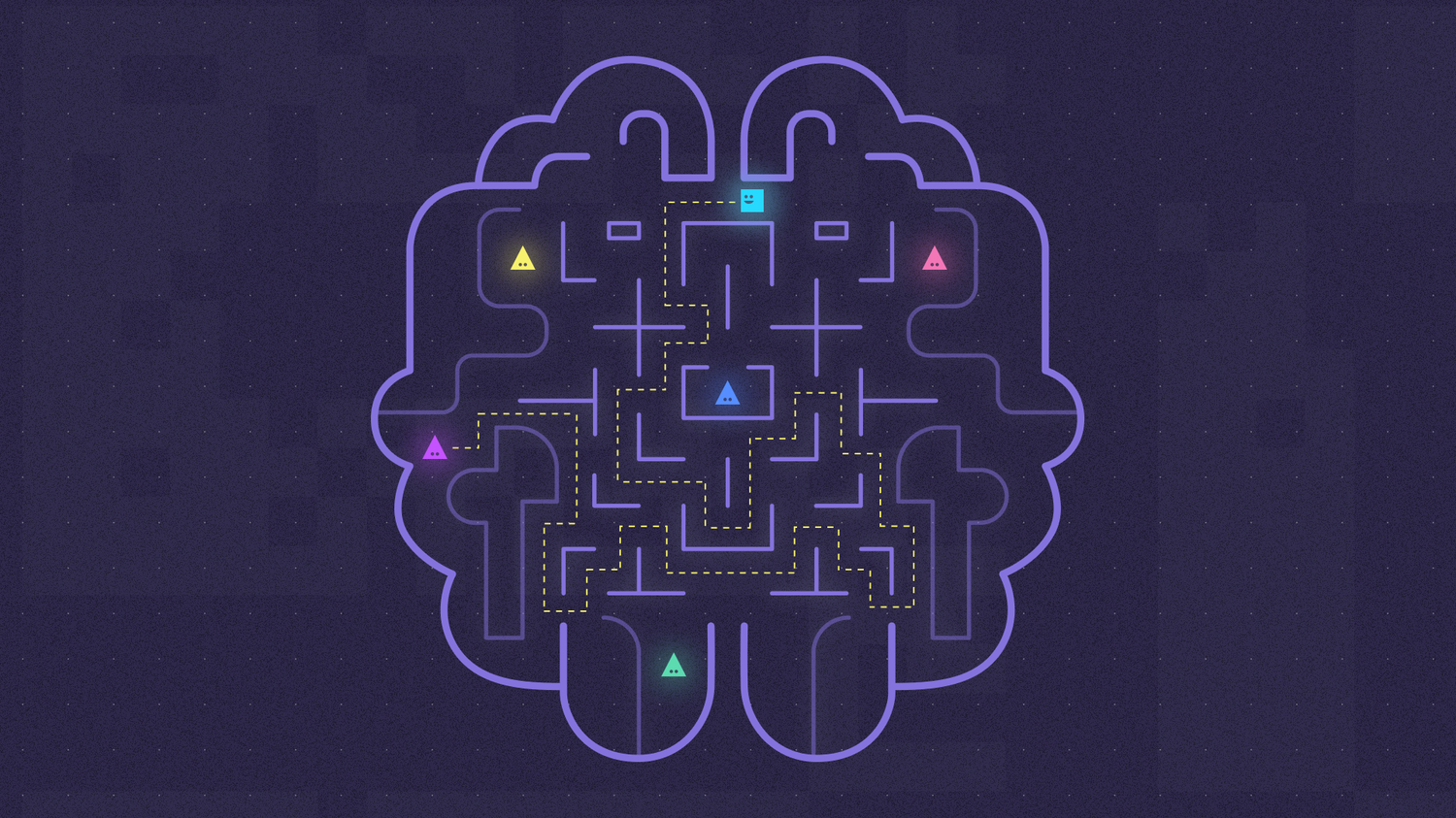

The problem with our current AIs is that they're one-trick ponies. This is partly due to how they're trained. AIs--or rather, the machine learning neural networks that power them--focus on a single problem, for example, how to recognize cats in an image.

Artificial neural networks are loosely based on their biological counterparts churning away in our brains. A network learns from millions and millions of examples, each one slightly adjusting the strength of the connections between "neurons" so that the network's answers get steadily closer to the right ones. It's like tuning a guitar.
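To make the tuning idea concrete, here is a minimal sketch of a single artificial "neuron" with one connection weight and one bias. Everything in it is made up for illustration: the rule it learns (y = 2x + 1), the learning rate, and the function name `train_neuron`. Real networks have millions of weights, but each example nudges them in the same basic way.

```python
# Toy illustration: one "neuron" with a single connection weight and a bias.
# The target rule (y = 2x + 1) and the learning rate are assumptions for this sketch.

def train_neuron(examples, learning_rate=0.05, epochs=200):
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for x, target in examples:
            prediction = weight * x + bias
            error = prediction - target
            # Each example nudges the parameters a little in the
            # direction that shrinks the error on that example.
            weight -= learning_rate * error * x
            bias -= learning_rate * error
    return weight, bias


examples = [(x, 2 * x + 1) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
weight, bias = train_neuron(examples)
print(round(weight, 3), round(bias, 3))
```

After enough passes over the examples, the weight and bias settle very close to 2 and 1, the values that reproduce the rule.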

The problem is that once an AI agent is trained on a single task, it's stuck with it. To learn another task, everything has to be reset, and the AI loses all of its previous learning. In other words, AIs need a way to call upon previous experience--they need a memory.
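The forgetting problem shows up even in the smallest possible "network". The sketch below is a toy with a single weight, and the two tasks (multiply by 2, then multiply by -3) are invented for illustration. It trains on task A, then on task B, and checks how well it still does on task A.

```python
# Toy illustration of forgetting: one weight, two made-up tasks.

def train(weight, examples, learning_rate=0.05, epochs=200):
    for _ in range(epochs):
        for x, target in examples:
            # Nudge the weight to reduce the error on this example.
            weight -= learning_rate * (weight * x - target) * x
    return weight


def mean_squared_error(weight, examples):
    return sum((weight * x - t) ** 2 for x, t in examples) / len(examples)


inputs = (-2.0, -1.0, 1.0, 2.0)
task_a = [(x, 2.0 * x) for x in inputs]    # hypothetical task A: y = 2x
task_b = [(x, -3.0 * x) for x in inputs]   # hypothetical task B: y = -3x

weight = train(0.0, task_a)
error_a_before = mean_squared_error(weight, task_a)

weight = train(weight, task_b)             # keep training, now on task B
error_a_after = mean_squared_error(weight, task_a)

print(error_a_before, error_a_after)
```

The error on task A starts out near zero and jumps to roughly 62.5 after training on task B, because the only weight the model has was overwritten.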

I've recently written about two ways that Google's mysterious DeepMind is going about the problem. One idea is to fashion a multi-layer ("deep") artificial neural network after a traditional computer. These systems combine a neural network with a separate memory module that somewhat resembles a computer's RAM. The neural network is still trained using datasets; the difference is that while it learns, it chooses if and where to store each bit of information in the memory module, and it links associated pieces in the order that they were stored.

Put simply, the algorithm killed two birds with one stone: it built a knowledge base to draw upon, and it mapped out a knowledge graph of patterns between each bit of information in the base. Because of this extra memory module, the program could tackle multi-step reasoning problems that baffled traditional neural networks.
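Here is a rough sketch of the two ingredients: a store you can search by similarity, and links that remember the order in which things were written. It is a hard-coded toy, not DeepMind's system. In the real thing the network learns where to write and reads in a soft, trainable way. The class name `ExternalMemory`, its methods, and the slot addresses are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ExternalMemory:
    """Toy, non-trainable stand-in for a network's separate memory module."""

    def __init__(self):
        self.slots = {}          # address -> stored vector (the knowledge base)
        self.next_link = {}      # address -> address written right after it
        self.last_written = None

    def write(self, address, vector):
        self.slots[address] = list(vector)
        # Record the write order as a link from the previous write to this one.
        if self.last_written is not None:
            self.next_link[self.last_written] = address
        self.last_written = address

    def lookup(self, key):
        """Return the address whose contents are most similar to the key."""
        return max(self.slots, key=lambda addr: cosine(self.slots[addr], key))

    def follow(self, address):
        """Return the address written right after this one, or None."""
        return self.next_link.get(address)


memory = ExternalMemory()
memory.write(7, [1.0, 0.0, 0.0])
memory.write(2, [0.0, 1.0, 0.0])
memory.write(5, [0.0, 0.0, 1.0])

start = memory.lookup([0.9, 0.1, 0.0])   # 7: closest stored item
second = memory.follow(start)            # 2: what was stored next
third = memory.follow(second)            # 5: and the one after that
print(start, second, third)
```

Chaining a lookup with a couple of `follow` calls is a crude picture of multi-step reasoning: find a relevant fact, then walk along the links to related ones.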

This solution is slightly clunky, because it requires a separate memory module and a ton of back-and-forth communication between processor and memory, which increases energy consumption and slows things down.

Another idea is to directly store the information within the strength of the connections between the artificial neurons themselves--in other words, very similar to how our brains store information.

The synapses--the connections between neurons--not only allow us to input information, but also physically change in order to store that data (generally by tweaking the structure of little protrusions called spines, which house neurochemical receptors).

In this system, after learning a task (playing an Atari game), the AI pauses, tries to figure out which connections were the most helpful for that task, and protects them. That is, it makes those useful connections harder to change as it subsequently learns to play another game. The trick worked pretty well, at least in terms of learning a series of Atari games.
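The "harder to change" part can be sketched as an extra penalty that pulls important weights back toward their old values while the model trains on something new. The toy below has two weights and two invented tasks. Its importance score (the average squared input each weight saw during task A) is a simple stand-in chosen for this sketch, not the estimate used in the actual research, and names such as `strength` and `anchor` are hypothetical.

```python
# Toy sketch: protect weights that mattered for task A while learning task B.

def train(weights, examples, anchor=None, importance=None, strength=0.0,
          learning_rate=0.05, steps=2000):
    weights = list(weights)
    n = len(weights)
    for _ in range(steps):
        grads = [0.0] * n
        for inputs, target in examples:
            error = sum(w * x for w, x in zip(weights, inputs)) - target
            for i in range(n):
                grads[i] += error * inputs[i] / len(examples)
        if anchor is not None:
            # Penalty: important weights are pulled back toward their old values.
            for i in range(n):
                grads[i] += strength * importance[i] * (weights[i] - anchor[i])
        weights = [w - learning_rate * g for w, g in zip(weights, grads)]
    return weights


def mean_squared_error(weights, examples):
    return sum((sum(w * x for w, x in zip(weights, inputs)) - target) ** 2
               for inputs, target in examples) / len(examples)


values = (-2.0, -1.0, 1.0, 2.0)
task_a = [((x, 0.0), 2.0 * x) for x in values]   # made-up task A: only input 1 is used
task_b = [((x, x), 3.0 * x) for x in values]     # made-up task B: many weight pairs solve it

weights_a = train([0.0, 0.0], task_a)
# Stand-in importance: how strongly each weight was exercised by task A.
importance = [sum(inputs[i] ** 2 for inputs, _ in task_a) / len(task_a)
              for i in range(2)]

plain = train(weights_a, task_b)
protected = train(weights_a, task_b, anchor=weights_a,
                  importance=importance, strength=4.0)

error_a_plain = mean_squared_error(plain, task_a)
error_a_protected = mean_squared_error(protected, task_a)
error_b_protected = mean_squared_error(protected, task_b)
print(plain, protected)
```

Without protection the weights drift to roughly [2.5, 0.5], which solves task B but damages task A. With the penalty they land near [2.0, 1.0]: the weight task A depends on stays put, and the spare weight absorbs the new task.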

The end goal, of course, is not engineering AIs that beat people at games--it's to make AIs with so-called "general intelligence", the kind of fluid reasoning that comes to humans (relatively) easily. Computer scientists have been dreaming of general AI since the inception of the idea of "thinking machines", and there have certainly been stumbling blocks. Whether these new studies are taking the field in the right direction remains to be seen.